#120 – Audrey Tang on what we can learn from Taiwan’s experiments with how to do democracy

In 2014 Taiwan was rocked by mass protests against a proposed trade agreement with China that was about to be agreed without the usual Parliamentary hearings. Students invaded and took over the Parlia...

2 Feb 2022 · 2h 5min

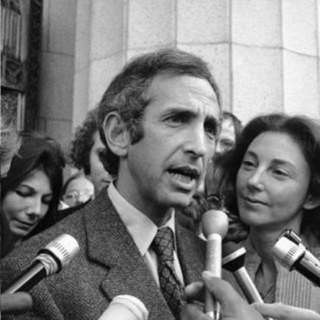

#43 Classic episode - Daniel Ellsberg on the institutional insanity that maintains nuclear doomsday machines

Rebroadcast: this episode was originally released in September 2018. In Stanley Kubrick’s iconic film Dr. Strangelove, the American president is informed that the Soviet Union has created a secret dete...

18 Jan 2022 · 2h 35min

#35 Classic episode - Tara Mac Aulay on the audacity to fix the world without asking permission

Rebroadcast: this episode was originally released in June 2018. How broken is the world? How inefficient is a typical organisation? Looking at Tara Mac Aulay’s life, the answer seems to be ‘very’. A...

10 Jan 2022 · 1h 23min

#67 Classic episode – David Chalmers on the nature and ethics of consciousness

Rebroadcast: this episode was originally released in December 2019. What is it like to be you right now? You're seeing this text on the screen, smelling the coffee next to you, and feeling the warmth...

3 Jan 2022 · 4h 42min

#59 Classic episode - Cass Sunstein on how change happens, and why it's so often abrupt & unpredictable

Rebroadcast: this episode was originally released in June 2019. It can often feel hopeless to be an activist seeking social change on an obscure issue where most people seem opposed or at best indiff...

27 Dec 2021 · 1h 43min

#119 – Andrew Yang on our very long-term future, and other topics most politicians won’t touch

Andrew Yang — past presidential candidate, founder of the Forward Party, and leader of the 'Yang Gang' — is kind of a big deal, but is particularly popular among listeners to The 80,000 Hours Podcast....

20 Dec 2021 · 1h 25min

#118 – Jaime Yassif on safeguarding bioscience to prevent catastrophic lab accidents and bioweapons development

If a rich country were really committed to pursuing an active biological weapons program, there’s not much we could do to stop them. With enough money and persistence, they’d be able to buy equipment,...

13 Dec 2021 · 2h 15min

#117 – David Denkenberger on using paper mills and seaweed to feed everyone in a catastrophe, ft Sahil Shah

If there's a nuclear war followed by nuclear winter, and the sun is blocked out for years, most of us are going to starve, right? Well, currently, probably we would, because humanity hasn't done much ...

29 Nov 2021 · 3h 8min