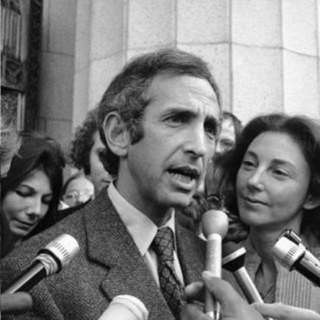

#43 - Daniel Ellsberg on the institutional insanity that maintains nuclear doomsday machines

In Stanley Kubrick’s iconic film Dr. Strangelove, the American president is informed that the Soviet Union has created a secret deterrence system which will automatically wipe out humanity upon detect...

25 Syys 20182h 44min

#42 - Amanda Askell on moral empathy, the value of information & the ethics of infinity

Consider two familiar moments at a family reunion. Our host, Uncle Bill, takes pride in his barbecuing skills. But his niece Becky says that she now refuses to eat meat. A groan goes round the table; ...

11 Syys 20182h 46min

#41 - David Roodman on incarceration, geomagnetic storms, & becoming a world-class researcher

With 698 inmates per 100,000 citizens, the U.S. is by far the leader among large wealthy nations in incarceration. But what effect does imprisonment actually have on crime? According to David Roodman...

28 Elo 20182h 18min

#40 - Katja Grace on forecasting future technology & how much we should trust expert predictions

Experts believe that artificial intelligence will be better than humans at driving trucks by 2027, working in retail by 2031, writing bestselling books by 2049, and working as surgeons by 2053. But ho...

21 Elo 20182h 11min

#39 - Spencer Greenberg on the scientific approach to solving difficult everyday questions

Will Trump be re-elected? Will North Korea give up their nuclear weapons? Will your friend turn up to dinner? Spencer Greenberg, founder of ClearerThinking.org has a process for working out such real...

7 Elo 20182h 17min

#38 - Yew-Kwang Ng on anticipating effective altruism decades ago & how to make a much happier world

Will people who think carefully about how to maximize welfare eventually converge on the same views? The effective altruism community has spent a lot of time over the past 10 years debating how best t...

26 Heinä 20181h 59min

#37 - GiveWell picks top charities by estimating the unknowable. James Snowden on how they do it.

What’s the value of preventing the death of a 5-year-old child, compared to a 20-year-old, or an 80-year-old? The global health community has generally regarded the value as proportional to the numbe...

16 Heinä 20181h 44min

#36 - Tanya Singh on ending the operations management bottleneck in effective altruism

Almost nobody is able to do groundbreaking physics research themselves, and by the time his brilliance was appreciated, Einstein was hardly limited by funding. But what if you could find a way to unlo...

11 Heinä 20182h 4min