GraphRAG: Knowledge Graphs for AI Applications with Kirk Marple - #681

Today we're joined by Kirk Marple, CEO and founder of Graphlit, to explore the emerging paradigm of "GraphRAG," or Graph Retrieval Augmented Generation. In our conversation, Kirk digs into the GraphRA...

22 Huhti 202447min

Teaching Large Language Models to Reason with Reinforcement Learning with Alex Havrilla - #680

Today we're joined by Alex Havrilla, a PhD student at Georgia Tech, to discuss "Teaching Large Language Models to Reason with Reinforcement Learning." Alex discusses the role of creativity and explora...

16 Huhti 202446min

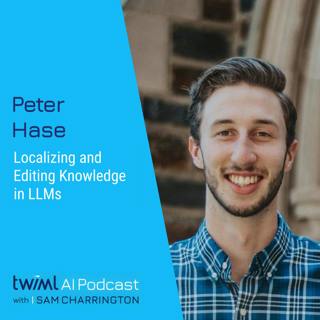

Localizing and Editing Knowledge in LLMs with Peter Hase - #679

Today we're joined by Peter Hase, a fifth-year PhD student at the University of North Carolina NLP lab. We discuss "scalable oversight", and the importance of developing a deeper understanding of how ...

8 Huhti 202449min

Coercing LLMs to Do and Reveal (Almost) Anything with Jonas Geiping - #678

Today we're joined by Jonas Geiping, a research group leader at the ELLIS Institute, to explore his paper: "Coercing LLMs to Do and Reveal (Almost) Anything". Jonas explains how neural networks can be...

1 Huhti 202448min

V-JEPA, AI Reasoning from a Non-Generative Architecture with Mido Assran - #677

Today we’re joined by Mido Assran, a research scientist at Meta’s Fundamental AI Research (FAIR). In this conversation, we discuss V-JEPA, a new model being billed as “the next step in Yann LeCun's vi...

25 Maalis 202447min

Video as a Universal Interface for AI Reasoning with Sherry Yang - #676

Today we’re joined by Sherry Yang, senior research scientist at Google DeepMind and a PhD student at UC Berkeley. In this interview, we discuss her new paper, "Video as the New Language for Real-World...

18 Maalis 202449min

Assessing the Risks of Open AI Models with Sayash Kapoor - #675

Today we’re joined by Sayash Kapoor, a Ph.D. student in the Department of Computer Science at Princeton University. Sayash walks us through his paper: "On the Societal Impact of Open Foundation Models...

11 Maalis 202440min

OLMo: Everything You Need to Train an Open Source LLM with Akshita Bhagia - #674

Today we’re joined by Akshita Bhagia, a senior research engineer at the Allen Institute for AI. Akshita joins us to discuss OLMo, a new open source language model with 7 billion and 1 billion variants...

4 Maalis 202432min