My experience with imposter syndrome — and how to (partly) overcome it (Article)

Today’s release is a reading of our article called My experience with imposter syndrome — and how to (partly) overcome it, written and narrated by Luisa Rodriguez. If you want to check out the links...

8 December 2022, 44min

Rob's thoughts on the FTX bankruptcy

In this episode, the show's usual host, Rob Wiblin, gives his thoughts on the recent collapse of FTX. Click here for an official 80,000 Hours statement. And here are links to some potentially relev...

23 November 2022, 5min

#140 – Bear Braumoeller on the case that war isn't in decline

Is war in long-term decline? Steven Pinker's The Better Angels of Our Nature brought this previously obscure academic question to the centre of public debate, and pointed to rates of death in war to a...

8 November 2022, 2h 47min

#139 – Alan Hájek on puzzles and paradoxes in probability and expected value

A casino offers you a game. A coin will be tossed. If it comes up heads on the first flip you win $2. If it first comes up heads on the second flip you win $4. If it first comes up heads on the third you win $8, the fourth y...

28 October 2022, 3h 38min
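The coin game described above is the classic St. Petersburg game. The description is cut off, so assume the payoff keeps doubling, paying $2^n$ dollars when the first heads lands on flip $n$. That outcome has probability $(1/2)^n$. Under that assumption, every possible outcome contributes exactly $1 to the expected value, so the sum diverges:

```latex
\mathbb{E}[\text{winnings}]
  = \sum_{n=1}^{\infty} \left(\tfrac{1}{2}\right)^{n} \cdot 2^{n}
  = \sum_{n=1}^{\infty} 1
  = \infty
```

This is why the game is a standard puzzle for expected value: a naive expected-value reasoner should pay any finite price to play it.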

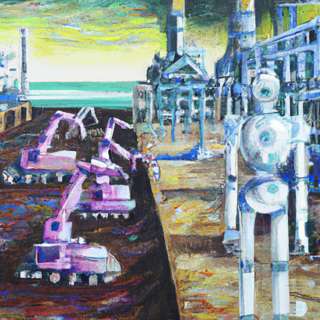

Preventing an AI-related catastrophe (Article)

Today’s release is a professional reading of our new problem profile on preventing an AI-related catastrophe, written by Benjamin Hilton. We expect that there will be substantial progress in AI in t...

14 October 2022, 2h 24min

#138 – Sharon Hewitt Rawlette on why pleasure and pain are the only things that intrinsically matter

What in the world is intrinsically good — good in itself even if it has no other effects? Over the millennia, people have offered many answers: joy, justice, equality, accomplishment, loving god, wisd...

30 September 2022, 2h 24min

#137 – Andreas Mogensen on whether effective altruism is just for consequentialists

Effective altruism, in a slogan, aims to 'do the most good.' Utilitarianism, in a slogan, says we should act to 'produce the greatest good for the greatest number.' It's clear enough why utilitarians ...

8 September 2022, 2h 21min

#136 – Will MacAskill on what we owe the future

People who exist in the future deserve some degree of moral consideration. The future could be very big, very long, and/or very good. We can reasonably hope to influence whether people in the future exi...

15 August 2022, 2h 54min