#220 – Ryan Greenblatt on the 4 most likely ways for AI to take over, and the case for and against AGI in <8 years

Ryan Greenblatt — lead author on the explosive paper “Alignment faking in large language models” and chief scientist at Redwood Research — thinks there’s a 25% chance that within four years, AI will b...

8 July 2025 · 2h 50min

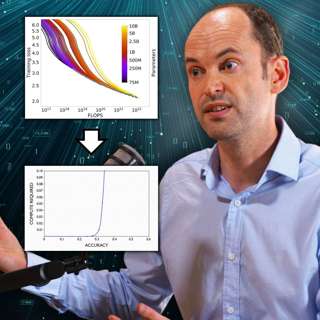

#219 – Toby Ord on graphs AI companies would prefer you didn't (fully) understand

The era of making AI smarter just by making it bigger is ending. But that doesn’t mean progress is slowing down — far from it. AI models continue to get much more powerful, just using very different m...

24 June 2025 · 2h 48min

#218 – Hugh White on why Trump is abandoning US hegemony – and that’s probably good

For decades, US allies have slept soundly under the protection of America’s overwhelming military might. Donald Trump — with his threats to ditch NATO, seize Greenland, and abandon Taiwan — seems hell...

12 June 2025 · 2h 48min

#217 – Beth Barnes on the most important graph in AI right now — and the 7-month rule that governs its progress

AI models today have a 50% chance of successfully completing a task that would take an expert human one hour. Seven months ago, that number was roughly 30 minutes — and seven months before that, 15 mi...

2 June 2025 · 3h 47min

Beyond human minds: The bewildering frontier of consciousness in insects, AI, and more

What if there’s something it’s like to be a shrimp, or a chatbot? For centuries, humans have debated the nature of consciousness, often placing ourselves at the very top. But what about the minds of o...

23 May 2025 · 3h 34min

Don’t believe OpenAI’s “nonprofit” spin (emergency pod with Tyler Whitmer)

OpenAI’s recent announcement that its nonprofit would “retain control” of its for-profit business sounds reassuring. But this seemingly major concession, celebrated by so many, is in itself largely me...

15 May 2025 · 1h 12min

The case for and against AGI by 2030 (article by Benjamin Todd)

More and more people have been saying that we might have AGI (artificial general intelligence) before 2030. Is that really plausible? This article by Benjamin Todd looks into the cases for and against...

12 May 2025 · 1h

Emergency pod: Did OpenAI give up, or is this just a new trap? (with Rose Chan Loui)

When attorneys general intervene in corporate affairs, it usually means something has gone seriously wrong. In OpenAI’s case, it appears to have forced a dramatic reversal of the company’s plans to si...

8 May 2025 · 1h 2min