Black Boxes Are Not Required

Deep neural networks are undeniably effective. They rely on so many parameters that they are aptly described as "black boxes". Black boxes lack desirable properties like interpretability and explainability, but in some cases their accuracy makes them incredibly useful. Does achieving "usefulness" require a black box, though? Can we be sure an equally valid but simpler solution does not exist? Cynthia Rudin helps us answer that question. We discuss her recent paper with co-author Joanna Radin, titled (spoiler warning)… Why Are We Using Black Box Models in AI When We Don't Need To? A Lesson From An Explainable AI Competition.

5 June 2020, 32 min

Robustness to Unforeseen Adversarial Attacks

Daniel Kang joins us to discuss the paper Testing Robustness Against Unforeseen Adversaries.

30 May 2020, 21 min

Estimating the Size of Language Acquisition

Frank Mollica joins us to discuss the paper Humans store about 1.5 megabytes of information during language acquisition.

22 May 2020, 25 min

Interpretable AI in Healthcare

Jayaraman Thiagarajan joins us to discuss the recent paper Calibrating Healthcare AI: Towards Reliable and Interpretable Deep Predictive Models.

15 May 2020, 35 min

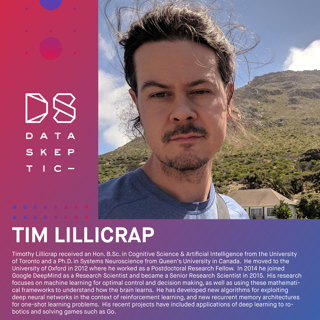

Understanding Neural Networks

What does it mean to understand a neural network? That's the question posed in this arXiv paper. Kyle speaks with Tim Lillicrap about it and several other big questions.

8 May 2020, 34 min

Self-Explaining AI

Dan Elton joins us to discuss self-explaining AI. What could be better than an interpretable model? How about a model that explains itself conversationally, engaging in a back-and-forth with the user? We discuss the paper Self-explaining AI as an alternative to interpretable AI, which presents a framework for self-explaining AI.

2 May 2020, 32 min

Plastic Bag Bans

Becca Taylor joins us to discuss her work on the impact of plastic bag bans, published as Bag Leakage: The Effect of Disposable Carryout Bag Regulations on Unregulated Bags in the Journal of Environmental Economics and Management. How does one measure the impact of these bans? Are they achieving their intended goals? Join us and find out!

24 April 2020, 34 min

Self Driving Cars and Pedestrians

We are joined by Arash Kalatian to discuss the paper Decoding pedestrian and automated vehicle interactions using immersive virtual reality and interpretable deep learning.

18 April 2020, 30 min