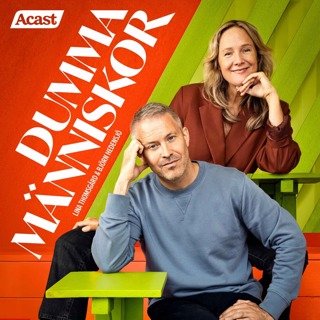

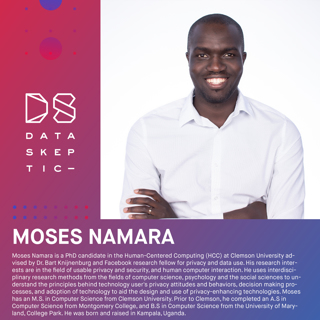

Human Computer Interaction and Online Privacy

Moses Namara from the HATLab joins us to discuss his research into the interaction between privacy and human-computer interaction.

27 July 2020, 32 min

Authorship Attribution of Lennon McCartney Songs

Mark Glickman joins us to discuss the paper Data in the Life: Authorship Attribution in Lennon-McCartney Songs.

20 July 2020, 33 min

GANs Can Be Interpretable

Erik Härkönen joins us to discuss the paper GANSpace: Discovering Interpretable GAN Controls. During the interview, Kyle makes reference to this amazing interpretable GAN controls video and its accompanying codebase found here. Erik mentions the GANSpace Colab notebook, which is a rapid way to try these ideas out for yourself.

11 July 2020, 26 min

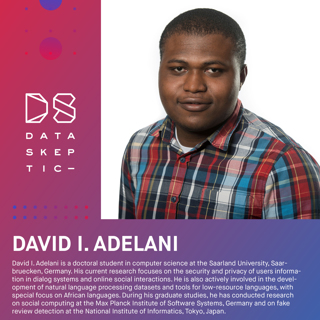

Sentiment Preserving Fake Reviews

David Ifeoluwa Adelani joins us to discuss Generating Sentiment-Preserving Fake Online Reviews Using Neural Language Models and Their Human- and Machine-based Detection.

6 July 2020, 28 min

Interpretability Practitioners

Sungsoo Ray Hong joins us to discuss the paper Human Factors in Model Interpretability: Industry Practices, Challenges, and Needs.

26 June 2020, 32 min

Facial Recognition Auditing

Deb Raji joins us to discuss her recent publication Saving Face: Investigating the Ethical Concerns of Facial Recognition Auditing.

19 June 2020, 47 min

Robust Fit to Nature

Uri Hasson joins us this week to discuss the paper Robust-fit to Nature: An Evolutionary Perspective on Biological (and Artificial) Neural Networks.

12 June 2020, 38 min

Black Boxes Are Not Required

Deep neural networks are undeniably effective. They rely on so many parameters that they are appropriately described as "black boxes". While black boxes lack desirable properties like interpretability and explainability, in some cases their accuracy makes them incredibly useful. But does achieving "usefulness" require a black box? Can we be sure an equally valid but simpler solution does not exist? Cynthia Rudin helps us answer that question. We discuss her recent paper with co-author Joanna Radin titled (spoiler warning)… Why Are We Using Black Box Models in AI When We Don't Need To? A Lesson From An Explainable AI Competition.

5 June 2020, 32 min