#222 – Can we tell if an AI is loyal by reading its mind? DeepMind's Neel Nanda (part 1)

We don’t know how AIs think or why they do what they do. Or at least, we don’t know much. That fact is only becoming more troubling as AIs grow more capable and appear on track to wield enormous cultu...

8 Sep 2025 · 3h 1min

#221 – Kyle Fish on the most bizarre findings from 5 AI welfare experiments

What happens when you lock two AI systems in a room together and tell them they can discuss anything they want? According to experiments run by Kyle Fish, Anthropic’s first AI welfare researcher, som...

28 Aug 2025 · 2h 28min

How not to lose your job to AI (article by Benjamin Todd)

About half of people are worried they’ll lose their job to AI. They’re right to be concerned: AI can now complete real-world coding tasks on GitHub, generate photorealistic video, drive a taxi more sa...

31 Jul 2025 · 51min

Rebuilding after apocalypse: What 13 experts say about bouncing back

What happens when civilisation faces its greatest tests? This compilation brings together insights from researchers, defence experts, philosophers, and policymakers on humanity’s ability to survive and...

15 Jul 2025 · 4h 26min

#220 – Ryan Greenblatt on the 4 most likely ways for AI to take over, and the case for and against AGI in <8 years

Ryan Greenblatt — lead author on the explosive paper “Alignment faking in large language models” and chief scientist at Redwood Research — thinks there’s a 25% chance that within four years, AI will b...

8 Jul 2025 · 2h 50min

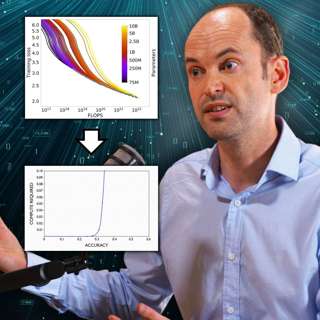

#219 – Toby Ord on graphs AI companies would prefer you didn't (fully) understand

The era of making AI smarter just by making it bigger is ending. But that doesn’t mean progress is slowing down — far from it. AI models continue to get much more powerful, just using very different m...

24 Jun 2025 · 2h 48min

#218 – Hugh White on why Trump is abandoning US hegemony – and that’s probably good

For decades, US allies have slept soundly under the protection of America’s overwhelming military might. Donald Trump — with his threats to ditch NATO, seize Greenland, and abandon Taiwan — seems hell...

12 Jun 2025 · 2h 48min

#217 – Beth Barnes on the most important graph in AI right now — and the 7-month rule that governs its progress

AI models today have a 50% chance of successfully completing a task that would take an expert human one hour. Seven months ago, that number was roughly 30 minutes — and seven months before that, 15 mi...

2 Jun 2025 · 3h 47min