#46 - Hilary Greaves on moral cluelessness & tackling crucial questions in academia

The barista gives you your coffee and change, and you walk away from the busy line. But you suddenly realise she gave you $1 less than she should have. Do you brush your way past the people now waitin...

23 Oct 2018, 2h 49min

#45 - Tyler Cowen's case for maximising econ growth, stabilising civilization & thinking long-term

I've probably spent more time reading Tyler Cowen - Professor of Economics at George Mason University - than any other author. Indeed it's his incredibly popular blog Marginal Revolution that prompted...

17 Oct 2018, 2h 30min

#44 - Paul Christiano on how we'll hand the future off to AI, & solving the alignment problem

Paul Christiano is one of the smartest people I know. After our first session produced such great material, we decided to do a second recording, resulting in our longest interview so far. While challe...

2 Oct 2018, 3h 51min

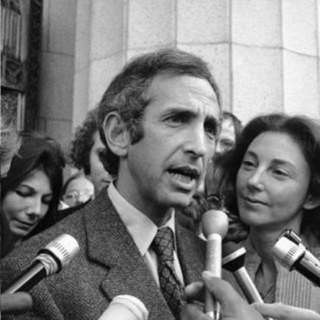

#43 - Daniel Ellsberg on the institutional insanity that maintains nuclear doomsday machines

In Stanley Kubrick’s iconic film Dr. Strangelove, the American president is informed that the Soviet Union has created a secret deterrence system which will automatically wipe out humanity upon detect...

25 Sep 2018, 2h 44min

#42 - Amanda Askell on moral empathy, the value of information & the ethics of infinity

Consider two familiar moments at a family reunion. Our host, Uncle Bill, takes pride in his barbecuing skills. But his niece Becky says that she now refuses to eat meat. A groan goes round the table; ...

11 Sep 2018, 2h 46min

#41 - David Roodman on incarceration, geomagnetic storms, & becoming a world-class researcher

With 698 inmates per 100,000 citizens, the U.S. is by far the leader among large wealthy nations in incarceration. But what effect does imprisonment actually have on crime? According to David Roodman...

28 Aug 2018, 2h 18min

#40 - Katja Grace on forecasting future technology & how much we should trust expert predictions

Experts believe that artificial intelligence will be better than humans at driving trucks by 2027, working in retail by 2031, writing bestselling books by 2049, and working as surgeons by 2053. But ho...

21 Aug 2018, 2h 11min

#39 - Spencer Greenberg on the scientific approach to solving difficult everyday questions

Will Trump be re-elected? Will North Korea give up their nuclear weapons? Will your friend turn up to dinner? Spencer Greenberg, founder of ClearerThinking.org, has a process for working out such real...

7 Aug 2018, 2h 17min