#75 – Michelle Hutchinson on what people most often ask 80,000 Hours

Since it was founded, 80,000 Hours has done one-on-one calls to supplement our online content and offer more personalised advice. We try to help people get clear on their most plausible paths, the key...

28 Apr 2020, 2h 13min

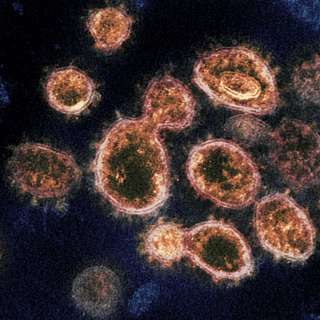

#74 – Dr Greg Lewis on COVID-19 & catastrophic biological risks

Our lives currently revolve around the global emergency of COVID-19; you’re probably reading this while confined to your house, as the death toll from the worst pandemic since 1918 continues to rise. ...

17 Apr 2020, 2h 37min

Article: Reducing global catastrophic biological risks

In a few days we'll be putting out a conversation with Dr Greg Lewis, who studies how to prevent global catastrophic biological risks at Oxford's Future of Humanity Institute. Greg also wrote a new ...

15 Apr 2020, 1h 4min

Emergency episode: Rob & Howie on the menace of COVID-19, and what both governments & individuals might do to help

From home isolation, Rob and Howie just recorded an episode on: 1. How many could die in the crisis, and the risk to your health personally. 2. What individuals might be able to do to help tackle the coro...

19 Mar 2020, 1h 52min

#73 – Phil Trammell on patient philanthropy and waiting to do good

To do good, most of us look to use our time and money to affect the world around us today. But perhaps that's all wrong. If you took $1,000 you were going to donate and instead put it in the stock mar...

17 Mar 2020, 2h 35min

#72 – Toby Ord on the precipice and humanity's potential futures

This week Oxford academic and 80,000 Hours trustee Dr Toby Ord released his new book The Precipice: Existential Risk and the Future of Humanity. It's about how our long-term future could be better tha...

7 Mar 2020, 3h 14min

#71 – Benjamin Todd on the key ideas of 80,000 Hours

The 80,000 Hours Podcast is about “the world’s most pressing problems and how you can use your career to solve them”, and in this episode we tackle that question in the most direct way possible. Las...

2 Mar 2020, 2h 57min

Arden & Rob on demandingness, work-life balance & injustice (80k team chat #1)

Today's bonus episode of the podcast is a quick conversation between me and my fellow 80,000 Hours researcher Arden Koehler about a few topics, including the demandingness of morality, work-life balan...

25 Feb 2020, 44min