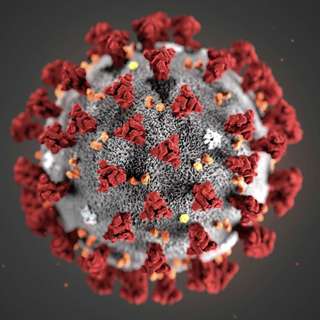

#70 - Dr Cassidy Nelson on the 12 best ways to stop the next pandemic (and limit nCoV)

nCoV is alarming governments and citizens around the world. It has killed more than 1,000 people, brought the Chinese economy to a standstill, and continues to show up in more and more places. But bad...

13 Feb 2020 · 2h 26min

#69 – Jeffrey Ding on China, its AI dream, and what we get wrong about both

The State Council of China's 2017 AI plan was the starting point of China’s AI planning; China’s approach to AI is defined by its top-down and monolithic nature; China is winning the AI arms race; and...

6 Feb 2020 · 1h 37min

Rob & Howie on what we do and don't know about 2019-nCoV

Two 80,000 Hours researchers, Robert Wiblin and Howie Lempel, record an experimental bonus episode about the new 2019-nCoV virus. See this list of resources, including many discussed in the episode, to...

3 Feb 2020 · 1h 18min

#68 - Will MacAskill on the paralysis argument, whether we're at the hinge of history, & his new priorities

You’re given a box with a set of dice in it. If you roll an even number, a person's life is saved. If you roll an odd number, someone else will die. Each time you shake the box you get $10. Should you...

24 Jan 2020 · 3h 25min

#44 Classic episode - Paul Christiano on finding real solutions to the AI alignment problem

Rebroadcast: this episode was originally released in October 2018. Paul Christiano is one of the smartest people I know. After our first session produced such great material, we decided to do a seco...

15 Jan 2020 · 3h 51min

#33 Classic episode - Anders Sandberg on cryonics, solar flares, and the annual odds of nuclear war

Rebroadcast: this episode was originally released in May 2018. Joseph Stalin had a life-extension program dedicated to making himself immortal. What if he had succeeded? According to Bryan Caplan ...

8 Jan 2020 · 1h 25min

#17 Classic episode - Will MacAskill on moral uncertainty, utilitarianism & how to avoid being a moral monster

Rebroadcast: this episode was originally released in January 2018. Immanuel Kant is a profoundly influential figure in modern philosophy, and was one of the earliest proponents for universal democracy...

31 Dec 2019 · 1h 52min

#67 – David Chalmers on the nature and ethics of consciousness

What is it like to be you right now? You're seeing this text on the screen, smelling the coffee next to you, and feeling the warmth of the cup. There’s a lot going on in your head — your conscious exp...

16 Dec 2019 · 4h 41min