#227 – Helen Toner on the geopolitics of AGI in China and the Middle East

With the US racing to develop AGI and superintelligence ahead of China, you might expect the two countries to be negotiating how they’ll deploy AI, including in the military, without coming to blows. ...

5 Nov 2025 · 2h 20min

#226 – Holden Karnofsky on unexploited opportunities to make AI safer — and all his AGI takes

For years, working on AI safety usually meant theorising about the ‘alignment problem’ or trying to convince other people to give a damn. If you could find any way to help, the work was frustrating an...

30 Oct 2025 · 4h 30min

#225 – Daniel Kokotajlo on what a hyperspeed robot economy might look like

When Daniel Kokotajlo talks to security experts at major AI labs, they tell him something chilling: “Of course we’re probably penetrated by the CCP already, and if they really wanted something, they c...

27 Oct 2025 · 2h 12min

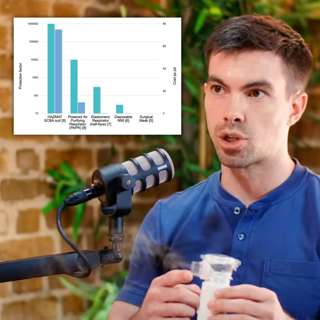

#224 – There's a cheap and low-tech way to save humanity from any engineered disease | Andrew Snyder-Beattie

Conventional wisdom is that safeguarding humanity from the worst biological risks — microbes optimised to kill as many as possible — is difficult bordering on impossible, making bioweapons humanity’s ...

2 Oct 2025 · 2h 31min

Inside the Biden admin’s AI policy approach | Jake Sullivan, Biden’s NSA | via The Cognitive Revolution

Jake Sullivan was the US National Security Advisor from 2021-2025. He joined our friends on The Cognitive Revolution podcast in August to discuss AI as a critical national security issue. We thought i...

26 Sep 2025 · 1h 5min

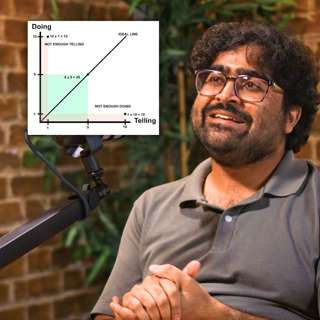

#223 – Neel Nanda on leading a Google DeepMind team at 26 – and advice if you want to work at an AI company (part 2)

At 26, Neel Nanda leads an AI safety team at Google DeepMind, has published dozens of influential papers, and mentored 50 junior researchers — seven of whom now work at major AI companies. His secret?...

15 Sep 2025 · 1h 46min

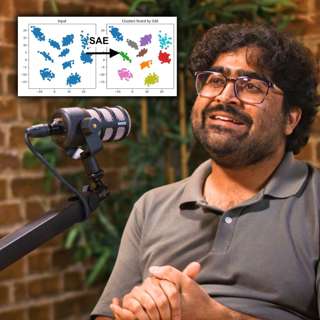

#222 – Can we tell if an AI is loyal by reading its mind? DeepMind's Neel Nanda (part 1)

We don’t know how AIs think or why they do what they do. Or at least, we don’t know much. That fact is only becoming more troubling as AIs grow more capable and appear on track to wield enormous cultu...

8 Sep 2025 · 3h 1min

#221 – Kyle Fish on the most bizarre findings from 5 AI welfare experiments

What happens when you lock two AI systems in a room together and tell them they can discuss anything they want? According to experiments run by Kyle Fish — Anthropic’s first AI welfare researcher — som...

28 Aug 2025 · 2h 28min