#220 – Ryan Greenblatt on the 4 most likely ways for AI to take over, and the case for and against AGI in <8 years

Ryan Greenblatt — lead author on the explosive paper “Alignment faking in large language models” and chief scientist at Redwood Research — thinks there’s a 25% chance that within four years, AI will be able to do everything needed to run an AI company, from writing code to designing experiments to making strategic and business decisions.

As Ryan lays out, AI models are “marching through the human regime”: systems that could handle five-minute tasks two years ago now tackle 90-minute projects. Double that a few more times and we may be automating full jobs rather than just parts of them.

Will setting AI to improve itself lead to an explosive positive feedback loop? Maybe, but maybe not.

The explosive scenario: Once you’ve automated your AI company, you could have the equivalent of 20,000 top researchers, each working 50 times faster than humans with total focus. “You have your AIs, they do a bunch of algorithmic research, they train a new AI, that new AI is smarter and better and more efficient… that new AI does even faster algorithmic research.” In this world, we could see years of AI progress compressed into months or even weeks.

With AIs now doing all of the work of programming their successors and blowing past the human level, Ryan thinks it would be fairly straightforward for them to take over and disempower humanity, if they thought doing so would better achieve their goals. In the interview he lays out the four most likely approaches for them to take.

The linear progress scenario: You automate your company but progress barely accelerates. Why? Multiple reasons, but the most likely is “it could just be that AI R&D research bottlenecks extremely hard on compute.” You’ve got brilliant AI researchers, but they’re all waiting for experiments to run on the same limited set of chips, so can only make modest progress.

Ryan’s median guess splits the difference: perhaps a 20x acceleration that lasts for a few months or years. Transformative, but less extreme than some in the AI companies imagine.

And his 25th percentile case? Progress “just barely faster” than before. All that automation, and all you’ve been able to do is keep pace.

Unfortunately the data we can observe today is so limited that it leaves us with vast error bars. “We’re extrapolating from a regime that we don’t even understand to a wildly different regime,” Ryan believes, “so no one knows.”

But that huge uncertainty means the explosive growth scenario is a plausible one — and the companies building these systems are spending tens of billions to try to make it happen.

In this extensive interview, Ryan elaborates on the above and the policy and technical response necessary to insure us against the possibility that they succeed — a scenario society has barely begun to prepare for.

Summary, video, and full transcript: https://80k.info/rg25

Recorded February 21, 2025.

Chapters:

- Cold open (00:00:00)
- Who’s Ryan Greenblatt? (00:01:10)
- How close are we to automating AI R&D? (00:01:27)
- Really, though: how capable are today's models? (00:05:08)
- Why AI companies get automated earlier than others (00:12:35)
- Most likely ways for AGI to take over (00:17:37)
- Would AGI go rogue early or bide its time? (00:29:19)
- The “pause at human level” approach (00:34:02)
- AI control over AI alignment (00:45:38)
- Do we have to hope to catch AIs red-handed? (00:51:23)
- How would a slow AGI takeoff look? (00:55:33)
- Why might an intelligence explosion not happen for 8+ years? (01:03:32)
- Key challenges in forecasting AI progress (01:15:07)
- The bear case on AGI (01:23:01)
- The change to “compute at inference” (01:28:46)
- How much has pretraining petered out? (01:34:22)
- Could we get an intelligence explosion within a year? (01:46:36)
- Reasons AIs might struggle to replace humans (01:50:33)
- Things could go insanely fast when we automate AI R&D. Or not. (01:57:25)
- How fast would the intelligence explosion slow down? (02:11:48)
- Bottom line for mortals (02:24:33)
- Six orders of magnitude of progress... what does that even look like? (02:30:34)
- Neglected and important technical work people should be doing (02:40:32)
- What's the most promising work in governance? (02:44:32)
- Ryan's current research priorities (02:47:48)

Tell us what you thought! https://forms.gle/hCjfcXGeLKxm5pLaA

Video editing: Luke Monsour, Simon Monsour, and Dominic Armstrong
Audio engineering: Ben Cordell, Milo McGuire, and Dominic Armstrong
Music: Ben Cordell
Transcriptions and web: Katy Moore

8 Jul 2h 50min

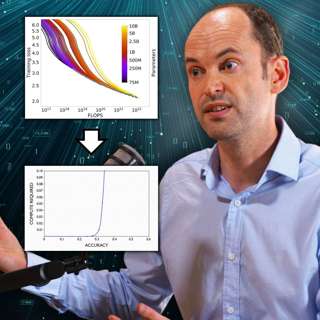

#219 – Toby Ord on graphs AI companies would prefer you didn't (fully) understand

The era of making AI smarter just by making it bigger is ending. But that doesn’t mean progress is slowing down — far from it. AI models continue to get much more powerful, just using very different methods, and those underlying technical changes force a big rethink of what coming years will look like.

Toby Ord — Oxford philosopher and bestselling author of The Precipice — has been tracking these shifts and mapping out the implications both for governments and our lives.

Links to learn more, video, highlights, and full transcript: https://80k.info/to25

As he explains, until recently anyone could access the best AI in the world “for less than the price of a can of Coke.” But unfortunately, that’s over.

What changed? AI companies first made models smarter by throwing a million times as much computing power at them during training, to make them better at predicting the next word. But with high quality data drying up, that approach petered out in 2024.

So they pivoted to something radically different: instead of training smarter models, they’re giving existing models dramatically more time to think — leading to the rise of the “reasoning models” that are at the frontier today.

The results are impressive, but this extra computing time comes at a cost: OpenAI’s o3 reasoning model achieved stunning results on a famous AI test by writing an Encyclopedia Britannica’s worth of reasoning to solve individual problems at a cost of over $1,000 per question.

This isn’t just technical trivia: if this improvement method sticks, it will change much about how the AI revolution plays out, starting with the fact that we can expect the rich and powerful to get access to the best AI models well before the rest of us.

Toby and host Rob discuss the implications of all that, plus the return of reinforcement learning (and resulting increase in deception), and Toby's commitment to clarifying the misleading graphs coming out of AI companies — to separate the snake oil and fads from the reality of what's likely a "transformative moment in human history."

Recorded on May 23, 2025.

Chapters:

- Cold open (00:00:00)
- Toby Ord is back — for a 4th time! (00:01:20)
- Everything has changed (and changed again) since 2020 (00:01:37)
- Is x-risk up or down? (00:07:47)
- The new scaling era: compute at inference (00:09:12)
- Inference scaling means less concentration (00:31:21)
- Will rich people get access to AGI first? Will the rest of us even know? (00:35:11)
- The new regime makes 'compute governance' harder (00:41:08)
- How 'IDA' might let AI blast past human level — or not (00:50:14)
- Reinforcement learning brings back 'reward hacking' agents (01:04:56)
- Will we get warning shots? Will they even help? (01:14:41)
- The scaling paradox (01:22:09)
- Misleading charts from AI companies (01:30:55)
- Policy debates should dream much bigger (01:43:04)
- Scientific moratoriums have worked before (01:56:04)
- Might AI 'go rogue' early on? (02:13:16)
- Lamps are regulated much more than AI (02:20:55)
- Companies made a strategic error shooting down SB 1047 (02:29:57)
- Companies should build in emergency brakes for their AI (02:35:49)
- Toby's bottom lines (02:44:32)

Tell us what you thought! https://forms.gle/enUSk8HXiCrqSA9J8

Video editing: Simon Monsour
Audio engineering: Ben Cordell, Milo McGuire, Simon Monsour, and Dominic Armstrong
Music: Ben Cordell
Camera operator: Jeremy Chevillotte
Transcriptions and web: Katy Moore

24 Jun 2h 48min

#218 – Hugh White on why Trump is abandoning US hegemony – and that’s probably good

For decades, US allies have slept soundly under the protection of America’s overwhelming military might. Donald Trump — with his threats to ditch NATO, seize Greenland, and abandon Taiwan — seems hell-bent on shattering that comfort.

But according to Hugh White — one of the world's leading strategic thinkers, emeritus professor at the Australian National University, and author of Hard New World: Our Post-American Future — Trump isn't destroying American hegemony. He's simply revealing that it's already gone.

Links to learn more, video, highlights, and full transcript: https://80k.info/hw

“Trump has very little trouble accepting other great powers as co-equals,” Hugh explains. And that happens to align perfectly with a strategic reality the foreign policy establishment desperately wants to ignore: fundamental shifts in global power have made the costs of maintaining a US-led hegemony prohibitively high.

Even under Biden, when Russia invaded Ukraine, the US sent weapons but explicitly ruled out direct involvement. Ukraine matters far more to Russia than to America, and this “asymmetry of resolve” makes Putin’s nuclear threats credible where America’s counterthreats simply aren’t. Hugh’s gloomy prediction: “Europeans will end up conceding to Russia whatever they can’t convince the Russians they’re willing to fight a nuclear war to deny them.”

The Pacific tells the same story. Despite Obama’s “pivot to Asia” and Biden’s tough talk about “winning the competition for the 21st century,” actual US military capabilities there have barely budged while China’s have soared, along with its economy — which is now bigger than the US’s, as measured in purchasing power. Containing China and defending Taiwan would require America to spend 8% of GDP on defence (versus 3.5% today) — and convince Beijing it’s willing to accept Los Angeles being vaporised.

Unlike during the Cold War, no president — Trump or otherwise — can make that case to voters.

Our new “multipolar” future, split between American, Chinese, Russian, Indian, and European spheres of influence, is a “darker world” than the golden age of US dominance. But Hugh’s message is blunt: for better or worse, 35 years of American hegemony are over.

Recorded May 30, 2025.

Chapters:

- 00:00:00 Cold open
- 00:01:25 US dominance is already gone
- 00:03:26 US hegemony was the weird aberration
- 00:13:08 Why the US bothered being the 'new Rome'
- 00:23:25 Evidence the US is accepting the multipolar global order
- 00:36:41 How Trump is advancing the inevitable
- 00:43:21 Rubio explicitly favours this outcome
- 00:45:42 Trump is half-right that the US was being ripped off
- 00:50:14 It doesn't matter if the next president feels differently
- 00:56:17 China's population is shrinking, but it doesn't matter
- 01:06:07 Why Hugh disagrees with other realists like Mearsheimer
- 01:10:52 Could the US be persuaded to spend 2x on defence?
- 01:16:22 A multipolar world is bad, but better than nuclear war
- 01:21:46 Will the US invade Panama? Greenland? Canada?!
- 01:32:01 What should everyone else do to protect themselves in this new world?
- 01:39:41 Europe is strong enough to take on Russia
- 01:44:03 But the EU will need nuclear weapons
- 01:48:34 Cancel (some) orders for US fighter planes
- 01:53:40 Taiwan is screwed, even with its AI chips
- 02:04:12 South Korea has to go nuclear too
- 02:08:08 Japan will go nuclear, but can't be a regional leader
- 02:11:44 Australia is defensible but needs a totally different military
- 02:17:19 AGI may or may not overcome existing nuclear deterrence
- 02:34:24 How right is realism?
- 02:40:17 Has a country ever gone to war over morality alone?
- 02:44:45 Hugh's message for Americans
- 02:47:12 Why America temporarily stopped being isolationist

Tell us what you thought! https://forms.gle/AM91VzL4BDroEe6AA

Video editing: Simon Monsour
Audio engineering: Ben Cordell, Milo McGuire, Simon Monsour, and Dominic Armstrong
Music: Ben Cordell
Transcriptions and web: Katy Moore

12 Jun 2h 48min

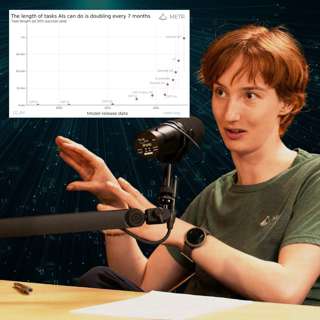

#217 – Beth Barnes on the most important graph in AI right now — and the 7-month rule that governs its progress

AI models today have a 50% chance of successfully completing a task that would take an expert human one hour. Seven months ago, that number was roughly 30 minutes — and seven months before that, 15 minutes. (See graph.)

These are substantial, multi-step tasks requiring sustained focus: building web applications, conducting machine learning research, or solving complex programming challenges.

Today’s guest, Beth Barnes, is CEO of METR (Model Evaluation & Threat Research) — the leading organisation measuring these capabilities.

Links to learn more, video, highlights, and full transcript: https://80k.info/bb

Beth's team has been timing how long it takes skilled humans to complete projects of varying length, then seeing how AI models perform on the same work. The resulting paper “Measuring AI ability to complete long tasks” made waves by revealing that the planning horizon of AI models was doubling roughly every seven months. It's regarded by many as the most useful AI forecasting work in years.

Beth has found models can already do “meaningful work” improving themselves, and she wouldn’t be surprised if AI models were able to autonomously self-improve as little as two years from now — in fact, “It seems hard to rule out even shorter [timelines]. Is there 1% chance of this happening in six, nine months? Yeah, that seems pretty plausible.”

Beth adds:

The sense I really want to dispel is, “But the experts must be on top of this. The experts would be telling us if it really was time to freak out.” The experts are not on top of this. Inasmuch as there are experts, they are saying that this is a concerning risk. … And to the extent that I am an expert, I am an expert telling you you should freak out.

What did you think of this episode? https://forms.gle/sFuDkoznxBcHPVmX6

Chapters:

- Cold open (00:00:00)
- Who is Beth Barnes? (00:01:19)
- Can we see AI scheming in the chain of thought? (00:01:52)
- The chain of thought is essential for safety checking (00:08:58)
- Alignment faking in large language models (00:12:24)
- We have to test model honesty even before they're used inside AI companies (00:16:48)
- We have to test models when unruly and unconstrained (00:25:57)
- Each 7 months models can do tasks twice as long (00:30:40)
- METR's research finds AIs are solid at AI research already (00:49:33)
- AI may turn out to be strong at novel and creative research (00:55:53)
- When can we expect an algorithmic 'intelligence explosion'? (00:59:11)
- Recursively self-improving AI might even be here in two years — which is alarming (01:05:02)
- Could evaluations backfire by increasing AI hype and racing? (01:11:36)
- Governments first ignore new risks, but can overreact once they arrive (01:26:38)
- Do we need external auditors doing AI safety tests, not just the companies themselves? (01:35:10)
- A case against safety-focused people working at frontier AI companies (01:48:44)
- The new, more dire situation has forced changes to METR's strategy (02:02:29)
- AI companies are being locally reasonable, but globally reckless (02:10:31)
- Overrated: Interpretability research (02:15:11)
- Underrated: Developing more narrow AIs (02:17:01)
- Underrated: Helping humans judge confusing model outputs (02:23:36)
- Overrated: Major AI companies' contributions to safety research (02:25:52)
- Could we have a science of translating AI models' nonhuman language or neuralese? (02:29:24)
- Could we ban using AI to enhance AI, or is that just naive? (02:31:47)
- Open-weighting models is often good, and Beth has changed her attitude to it (02:37:52)
- What we can learn about AGI from the nuclear arms race (02:42:25)
- Infosec is so bad that no models are truly closed-weight models (02:57:24)
- AI is more like bioweapons because it undermines the leading power (03:02:02)
- What METR can do best that others can't (03:12:09)
- What METR isn't doing that other people have to step up and do (03:27:07)
- What research METR plans to do next (03:32:09)

This episode was originally recorded on February 17, 2025.

Video editing: Luke Monsour and Simon Monsour
Audio engineering: Ben Cordell, Milo McGuire, Simon Monsour, and Dominic Armstrong
Music: Ben Cordell
Transcriptions and web: Katy Moore
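
The seven-month doubling rule in this episode's description can be turned into a quick back-of-the-envelope calculation. The sketch below is only an illustration: it assumes the trend is a clean exponential anchored at a one-hour task horizon today, and the function names and the 40-hour example target are hypothetical choices, not anything taken from METR's paper.

```python
import math

# Assumptions taken from the episode description: a one-hour task horizon
# today (at 50% success), doubling roughly every seven months.
CURRENT_HORIZON_MINUTES = 60.0
DOUBLING_MONTHS = 7.0


def horizon_minutes(months_from_now: float) -> float:
    """Task horizon implied by a pure exponential trend.

    Negative values look backwards in time; positive values extrapolate,
    which is only valid if the trend actually continues.
    """
    return CURRENT_HORIZON_MINUTES * 2 ** (months_from_now / DOUBLING_MONTHS)


def months_until(target_minutes: float) -> float:
    """Months until the trend reaches a given task length."""
    return DOUBLING_MONTHS * math.log2(target_minutes / CURRENT_HORIZON_MINUTES)


if __name__ == "__main__":
    # Reproduces the figures quoted in the description: 30 and 15 minutes.
    print(horizon_minutes(-7), horizon_minutes(-14))
    # Hypothetical target: a 40-hour task (2,400 minutes).
    print(round(months_until(40 * 60), 1))
```

Under these assumptions, a 40-hour task sits a little over five doublings away, or roughly three years. The real trend could bend in either direction, so this is an extrapolation rather than a forecast.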

2 Jun 3h 47min

Beyond human minds: The bewildering frontier of consciousness in insects, AI, and more

What if there’s something it’s like to be a shrimp — or a chatbot?

For centuries, humans have debated the nature of consciousness, often placing ourselves at the very top. But what about the minds of others — both the animals we share this planet with and the artificial intelligences we’re creating?

We’ve pulled together clips from past conversations with researchers and philosophers who’ve spent years trying to make sense of animal consciousness, artificial sentience, and moral consideration under deep uncertainty.

Links to learn more and full transcript: https://80k.info/nhs

Chapters:

- Cold open (00:00:00)
- Luisa's intro (00:00:57)
- Robert Long on what we should picture when we think about artificial sentience (00:02:49)
- Jeff Sebo on what the threshold is for AI systems meriting moral consideration (00:07:22)
- Meghan Barrett on the evolutionary argument for insect sentience (00:11:24)
- Andrés Jiménez Zorrilla on whether there’s something it’s like to be a shrimp (00:15:09)
- Jonathan Birch on the cautionary tale of newborn pain (00:21:53)
- David Chalmers on why artificial consciousness is possible (00:26:12)
- Holden Karnofsky on how we’ll see digital people as... people (00:32:18)
- Jeff Sebo on grappling with our biases and ignorance when thinking about sentience (00:38:59)
- Bob Fischer on how to think about the moral weight of a chicken (00:49:37)
- Cameron Meyer Shorb on the range of suffering in wild animals (01:01:41)
- Sébastien Moro on whether fish are conscious or sentient (01:11:17)
- David Chalmers on when to start worrying about artificial consciousness (01:16:36)
- Robert Long on how we might stumble into causing AI systems enormous suffering (01:21:04)
- Jonathan Birch on how we might accidentally create artificial sentience (01:26:13)
- Anil Seth on which parts of the brain are required for consciousness (01:32:33)
- Peter Godfrey-Smith on uploads of ourselves (01:44:47)
- Jonathan Birch on treading lightly around the “edge cases” of sentience (02:00:12)
- Meghan Barrett on whether brain size and sentience are related (02:05:25)
- Lewis Bollard on how animal advocacy has changed in response to sentience studies (02:12:01)
- Bob Fischer on using proxies to determine sentience (02:22:27)
- Cameron Meyer Shorb on how we can practically study wild animals’ subjective experiences (02:26:28)
- Jeff Sebo on the problem of false positives in assessing artificial sentience (02:33:16)
- Stuart Russell on the moral rights of AIs (02:38:31)
- Buck Shlegeris on whether AI control strategies make humans the bad guys (02:41:50)
- Meghan Barrett on why she can’t be totally confident about insect sentience (02:47:12)
- Bob Fischer on what surprised him most about the findings of the Moral Weight Project (02:58:30)
- Jeff Sebo on why we’re likely to sleepwalk into causing massive amounts of suffering in AI systems (03:02:46)
- Will MacAskill on the rights of future digital beings (03:05:29)
- Carl Shulman on sharing the world with digital minds (03:19:25)
- Luisa's outro (03:33:43)

Audio engineering: Ben Cordell, Milo McGuire, Simon Monsour, and Dominic Armstrong
Additional content editing: Katy Moore and Milo McGuire
Transcriptions and web: Katy Moore

23 May 3h 34min

Don’t believe OpenAI’s “nonprofit” spin (emergency pod with Tyler Whitmer)

OpenAI’s recent announcement that its nonprofit would “retain control” of its for-profit business sounds reassuring. But this seemingly major concession, celebrated by so many, is in itself largely meaningless.

Litigator Tyler Whitmer is a coauthor of a newly published letter that describes this attempted sleight of hand and directs regulators on how to stop it.

As Tyler explains, the plan both before and after this announcement has been to convert OpenAI into a Delaware public benefit corporation (PBC) — and this alone will dramatically weaken the nonprofit’s ability to direct the business in pursuit of its charitable purpose: ensuring AGI is safe and “benefits all of humanity.”

Right now, the nonprofit directly controls the business. But were OpenAI to become a PBC, the nonprofit, rather than having its “hand on the lever,” would merely contribute to the decision of who does.

Why does this matter? Today, if OpenAI’s commercial arm were about to release an unhinged AI model that might make money but be bad for humanity, the nonprofit could directly intervene to stop it. In the proposed new structure, it likely couldn’t do much at all.

But it’s even worse than that: even if the nonprofit could select the PBC’s directors, those directors would have fundamentally different legal obligations from those of the nonprofit. A PBC director must balance public benefit with the interests of profit-driven shareholders — by default, they cannot legally prioritise public interest over profits, even if they and the controlling shareholder that appointed them want to do so.

As Tyler points out, there isn’t a single reported case of a shareholder successfully suing to enforce a PBC’s public benefit mission in the 10+ years since the Delaware PBC statute was enacted.

This extra step from the nonprofit to the PBC would also mean that the attorneys general of California and Delaware — who today are empowered to ensure the nonprofit pursues its mission — would find themselves powerless to act. These are probably not side effects but rather a Trojan horse that for-profit investors are trying to slip past regulators.

Fortunately this can all be addressed — but it requires either the nonprofit board or the attorneys general of California and Delaware to promptly put their foot down and insist on watertight legal agreements that preserve OpenAI’s current governance safeguards and enforcement mechanisms.

As Tyler explains, the same arrangements that currently bind the OpenAI business have to be written into a new PBC’s certificate of incorporation — something that won’t happen by default and that powerful investors have every incentive to resist.

Full transcript and links to learn more: https://80k.info/tw

Chapters:

- Cold open (00:00:00)
- Who’s Tyler Whitmer? (00:01:35)
- The new plan may be no improvement (00:02:04)
- The public hasn't even been allowed to know what they are owed (00:06:55)
- Issues beyond control (00:11:02)
- The new directors wouldn’t have to pursue the current purpose (00:12:06)
- The nonprofit might not even retain voting control (00:16:58)
- The attorneys general could lose their enforcement oversight (00:22:11)
- By default things go badly (00:29:09)
- How to keep the mission in the restructure (00:32:25)
- What will become of OpenAI’s Charter? (00:37:11)
- Ways to make things better, and not just avoid them getting worse (00:42:38)
- How the AGs can avoid being disempowered (00:48:35)
- Retaining the power to fire the CEO (00:54:49)
- Will the current board get a financial stake in OpenAI? (00:57:40)
- Could the AGs insist the current nonprofit agreement be made public? (00:59:15)
- How OpenAI is valued should be transparent and scrutinised (01:01:00)
- Investors aren't bad people, but they can't be trusted either (01:06:05)

This episode was originally recorded on May 13, 2025.

Video editing: Simon Monsour and Luke Monsour
Audio engineering: Ben Cordell, Milo McGuire, Simon Monsour, and Dominic Armstrong
Music: Ben Cordell
Transcriptions and web: Katy Moore

15 May 1h 12min

The case for and against AGI by 2030 (article by Benjamin Todd)

More and more people have been saying that we might have AGI (artificial general intelligence) before 2030. Is that really plausible?

This article by Benjamin Todd looks into the cases for and against, and summarises the key things you need to know to understand the debate. You can see all the images and many footnotes in the original article on the 80,000 Hours website.

In a nutshell:

- Four key factors are driving AI progress: larger base models, teaching models to reason, increasing models’ thinking time, and building agent scaffolding for multi-step tasks. These are underpinned by increasing computational power to run and train AI systems, as well as increasing human capital going into algorithmic research.
- All of these drivers are set to continue until 2028 and perhaps until 2032.
- This means we should expect major further gains in AI performance. We don’t know how large they’ll be, but extrapolating recent trends on benchmarks suggests we’ll reach systems with beyond-human performance in coding and scientific reasoning, and that can autonomously complete multi-week projects.
- Whether we call these systems ‘AGI’ or not, they could be sufficient to enable AI research itself, robotics, the technology industry, and scientific research to accelerate — leading to transformative impacts.
- Alternatively, AI might fail to overcome issues with ill-defined, high-context work over long time horizons and remain a tool (even if much improved compared to today).
- Increasing AI performance requires exponential growth in investment and the research workforce. At current rates, we will likely start to reach bottlenecks around 2030. Simplifying a bit, that means we’ll likely either reach AGI by around 2030 or see progress slow significantly. Hybrid scenarios are also possible, but the next five years seem especially crucial.

Chapters:

- Introduction (00:00:00)
- The case for AGI by 2030 (00:00:33)
- The article in a nutshell (00:04:04)
- Section 1: What's driven recent AI progress? (00:05:46)
- How we got here: the deep learning era (00:05:52)
- Where are we now: the four key drivers (00:07:45)
- Driver 1: Scaling pretraining (00:08:57)
- Algorithmic efficiency (00:12:14)
- How much further can pretraining scale? (00:14:22)
- Driver 2: Training the models to reason (00:16:15)
- How far can scaling reasoning continue? (00:22:06)
- Driver 3: Increasing how long models think (00:25:01)
- Driver 4: Building better agents (00:28:00)
- How far can agent improvements continue? (00:33:40)
- Section 2: How good will AI become by 2030? (00:35:59)
- Trend extrapolation of AI capabilities (00:37:42)
- What jobs would these systems help with? (00:39:59)
- Software engineering (00:40:50)
- Scientific research (00:42:13)
- AI research (00:43:21)
- What's the case against this? (00:44:30)
- Additional resources on the sceptical view (00:49:18)
- When do the 'experts' expect AGI? (00:49:50)
- Section 3: Why the next 5 years are crucial (00:51:06)
- Bottlenecks around 2030 (00:52:10)
- Two potential futures for AI (00:56:02)
- Conclusion (00:58:05)
- Thanks for listening (00:59:27)

Audio engineering: Dominic Armstrong
Music: Ben Cordell

12 May 1h

Emergency pod: Did OpenAI give up, or is this just a new trap? (with Rose Chan Loui)

When attorneys general intervene in corporate affairs, it usually means something has gone seriously wrong. In OpenAI’s case, it appears to have forced a dramatic reversal of the company’s plans to sideline its nonprofit foundation, announced in a blog post that made headlines worldwide.

The company’s sudden announcement that its nonprofit will “retain control” credits “constructive dialogue” with the attorneys general of California and Delaware — corporate-speak for what was likely a far more consequential confrontation behind closed doors. A confrontation perhaps driven by public pressure from Nobel Prize winners, past OpenAI staff, and community organisations.

But whether this change will help depends entirely on the details of implementation — details that remain worryingly vague in the company’s announcement.

Return guest Rose Chan Loui, nonprofit law expert at UCLA, sees potential in OpenAI’s new proposal, but emphasises that “control” must be carefully defined and enforced: “The words are great, but what’s going to back that up?” Without explicitly defining the nonprofit’s authority over safety decisions, the shift could be largely cosmetic.

Links to learn more, video, and full transcript: https://80k.info/rcl4

Why have state officials taken such an interest so far? Host Rob Wiblin notes, “OpenAI was proposing that the AGs would no longer have any say over what this super momentous company might end up doing. … It was just crazy how they were suggesting that they would take all of the existing money and then pursue a completely different purpose.”

Now that they’re in the picture, the AGs have leverage to ensure the nonprofit maintains genuine control over issues of public safety as OpenAI develops increasingly powerful AI.

Rob and Rose explain three key areas where the AGs can make a huge difference to whether this plays out in the public’s best interest:

- Ensuring that the contractual agreements giving the nonprofit control over the new Delaware public benefit corporation are watertight, and don’t accidentally shut the AGs out of the picture.
- Insisting that a majority of board members are truly independent by prohibiting indirect as well as direct financial stakes in the business.
- Insisting that the board is empowered with the money, independent staffing, and access to information which they need to do their jobs.

This episode was originally recorded on May 6, 2025.

Chapters:

- Cold open (00:00:00)
- Rose is back! (00:01:06)
- The nonprofit will stay 'in control' (00:01:28)
- Backlash to OpenAI’s original plans (00:08:22)
- The new proposal (00:16:33)
- Giving up the super-profits (00:20:52)
- Can the nonprofit maintain control of the company? (00:24:49)
- Could for profit investors sue if profits aren't prioritised? (00:33:01)
- The 6 governance safeguards at risk with the restructure (00:34:33)
- Will the nonprofit’s giving just be corporate PR for the for-profit? (00:49:12)
- Is this good, or not? (00:51:06)
- Ways this could still go wrong – but reasons for optimism (00:54:19)

Video editing: Simon Monsour and Luke Monsour
Audio engineering: Ben Cordell, Milo McGuire, Simon Monsour, and Dominic Armstrong
Music: Ben Cordell
Transcriptions and web: Katy Moore

8 May 1h 2min